What is Ad Hoc Testing?

Ad Hoc Testing is informal testing performed at random, without any plan. It is also referred to as Random Testing or Monkey Testing. It does not follow any documentation or test plan: the testing steps and scenarios depend entirely on the tester, and defects are found by random checking.

Ad hoc testing does not follow a structured approach and can be done on any part of the application. Its main aim is to find defects by random checking. One technique that supports it is Error Guessing, in which people with enough domain knowledge and experience “guess” the most likely sources of errors.
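As a rough illustration of Error Guessing, the sketch below runs a list of "guessed" troublesome inputs against a function and records what happens. Everything in it is an assumption made for the example: `parse_quantity` is a hypothetical function under test, and the input list is just one tester's guess at likely trouble spots.

```python
# Hypothetical function under test: parses a quantity field from a form.
def parse_quantity(text):
    value = int(text.strip())
    if value < 0:
        raise ValueError("quantity must not be negative")
    return value

# Inputs an experienced tester might "guess" are likely to cause trouble.
GUESSED_INPUTS = ["", "   ", "0", "-1", "1.5", "abc", "9" * 30, None]

def run_error_guessing(func, inputs):
    """Call func with each guessed input and record what happened."""
    findings = []
    for item in inputs:
        try:
            findings.append((item, "ok", func(item)))
        except ValueError as exc:
            # A controlled rejection of bad input.
            findings.append((item, "rejected", str(exc)))
        except Exception as exc:
            # Anything else is an uncontrolled failure worth reporting.
            findings.append((item, "crash", type(exc).__name__))
    return findings

if __name__ == "__main__":
    for item, outcome, detail in run_error_guessing(parse_quantity, GUESSED_INPUTS):
        print(repr(item), outcome, detail)
```

In this sketch the `None` input is reported as a crash, because the hypothetical function never checks its argument type. That is the kind of defect an experienced tester would expect to surface this way.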

This testing requires no documentation, planning, or process. Because it aims to find defects through a random approach, without any documentation, defects are not mapped to test cases. As a result, defects can be very difficult to reproduce, since no test steps or requirements are mapped to them.

Types of Ad Hoc Testing

Buddy Testing:

Two buddies work together to identify defects in the same module. Usually one buddy is from the development team and the other is from the testing team. Buddy testing helps testers develop better test cases and lets the development team make design changes early. It usually happens after unit testing is complete.

Pair Testing:

Two testers are assigned modules, share ideas, and work on the same machine to find defects. One person executes the tests while the other takes notes on the findings, so the pair splits the roles of tester and scribe.

Monkey Testing:

The tester randomly tests the product or application, without test cases, with the goal of breaking the system.
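Monkey testing can also be approximated in code. The sketch below feeds random strings to a function and collects the inputs that make it crash. It is only an illustration under stated assumptions: `normalize_username` is a hypothetical function with a deliberate bug, and recording the random seed is one possible way to make a random run repeatable.

```python
import random
import string

# Hypothetical function under test, with a deliberate bug for illustration.
def normalize_username(name):
    name = name.strip().lower()
    return name[0] + name[1:].replace(" ", "_")  # fails on empty input

def random_text(rng, max_len=8):
    """Build a random string of 0..max_len letters, digits, and spaces."""
    alphabet = string.ascii_letters + string.digits + " "
    return "".join(rng.choice(alphabet) for _ in range(rng.randint(0, max_len)))

def monkey_test(func, runs=200, seed=None):
    """Feed random inputs to func and collect the ones that make it crash."""
    if seed is None:
        seed = random.randrange(2**32)
    rng = random.Random(seed)  # a known seed lets the same run be replayed
    failures = []
    for _ in range(runs):
        text = random_text(rng)
        try:
            func(text)
        except Exception as exc:
            failures.append((text, type(exc).__name__))
    return seed, failures

if __name__ == "__main__":
    seed, failures = monkey_test(normalize_username)
    print("seed:", seed, "failures:", len(failures))
```

Passing the printed seed back in as `monkey_test(normalize_username, seed=...)` replays the same sequence of inputs, which helps when a defect found at random has to be shown to someone else.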

When to execute Ad hoc Testing?

Ad hoc testing can be done at any point in the project’s testing, whether at the beginning, the middle, or the end. It can be performed when time is very limited but detailed testing is still required. Usually it is performed after formal test execution. Ad hoc testing is effective only if the tester has thorough knowledge of the system under test.

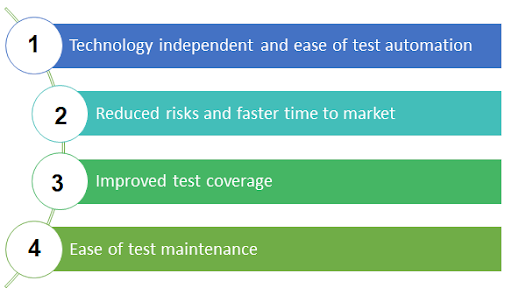

Ad Hoc Testing does have its own advantages:

- It is a totally informal approach that provides an opportunity for discovery, allowing the tester to find cases and scenarios that were missed when the test cases were written.

- The tester can really immerse themselves in the role of the end user, performing tests without any boundaries or preconceived ideas.

- The approach can be implemented easily, without any documents or planning.

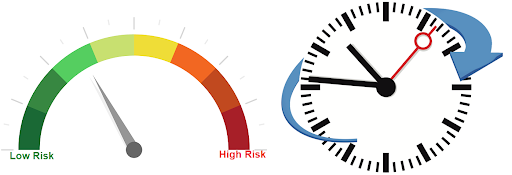

That said, while Ad Hoc Testing is certainly useful, a tester shouldn’t rely on it alone. On a project following the Scrum methodology, for example, a tester who focuses only on the requirements and performs Ad Hoc testing for the rest of the project’s modules will likely overlook some important areas and miss other very important scenarios.

When using an Ad Hoc approach, a tester may attempt to cover all the scenarios and areas but will likely still miss a number of them. There is always a risk that the tester performs the same or similar tests multiple times while other important functionality is broken and never gets tested at all. This is because Ad Hoc Testing does not require all the major risk areas to be covered.

Drawbacks:

- Because this type of testing is unstructured and no documentation is mandatory, the main disadvantage is that the tester has to remember all the scenarios.

- The tester may not be able to recreate bugs in subsequent attempts if someone asks for the issue to be reproduced.
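One lightweight way to soften these drawbacks, without turning the session into formal documentation, is to jot down each action as it happens. The sketch below is a hypothetical helper written for this article (`SessionLog` and its methods are invented names, not part of any tool) that turns such notes into steps to reproduce.

```python
import json
import time

class SessionLog:
    """Hypothetical helper: keeps a timestamped list of tester actions."""

    def __init__(self):
        self.steps = []

    def note(self, action, observed=""):
        """Record one action and, optionally, what the tester observed."""
        self.steps.append({"time": time.time(), "action": action, "observed": observed})

    def steps_to_reproduce(self):
        """Return the recorded actions as a numbered list of steps."""
        return [f"{n}. {s['action']}" for n, s in enumerate(self.steps, start=1)]

    def dump(self):
        """Serialize the whole session as JSON for a defect report."""
        return json.dumps(self.steps, indent=2)

if __name__ == "__main__":
    log = SessionLog()
    log.note("Open checkout page")
    log.note("Enter quantity -1", observed="Total shows a negative price")
    print("\n".join(log.steps_to_reproduce()))
```

The tester still decides what to try on the fly. The log only preserves the path taken, so a defect found at random can be replayed later.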

Ad hoc testing gives the tester knowledge of applications across a variety of domains. Because the entire application can be tested within a short time, it also gives the tester the confidence to prepare more Ad hoc scenarios. Formal test scenarios can be written from the requirements, but Ad hoc scenarios come from doing a round of Ad hoc testing on the application, which can find more bugs than formal test execution alone.

Adhoc Testing vs Exploratory Testing

Ad-hoc Testing: The tester may refer to existing test cases and pick a few at random to test the application. It is more like trial and error to find a bug. If you find one, you already have a documented test case to mark as failed.

Exploratory testing: This is a formal testing process that doesn’t rely on test cases or test planning documents. Instead, testers go through the application and learn about its functionality. They then use exploratory test charters to direct, record, and keep track of the observations from exploratory test sessions.

| Adhoc Testing | Exploratory Testing |
| --- | --- |
| Adhoc testing is considered to be more informal in nature. | Exploratory testing is a formal form of testing. |
| Adhoc testing requires enough domain knowledge and experience. | Exploratory testing is a side-by-side process of exploring and learning the application. |
| Adhoc testing doesn’t need any plan for performing any activity. | Before performing exploratory testing, the tester needs to spend some time identifying the area of attack (where bugs are more likely), including the scope of testing and the goals that need to be completed during the specified time. |
| There is no time limit for performing Adhoc testing. The major focus is on finding bugs. | It is a time-based approach, which helps in managing and tracking. It includes a dedicated, time-boxed testing session with no interruptions from email, phone, messages, etc. |
| No documentation is required in this testing, neither before conducting the test nor after an error or bug is found. | Here, maintaining proper documentation is mandatory for recording and tracking the observations from exploratory test sessions. |
| Adhoc testing can only be executed once, because no documentation is maintained and an error cannot be reproduced. | Exploratory testing involves learning from the test results obtained and creating new solutions to resolve the issues. |
| This testing works on a negative testing approach. | This testing uses both negative and positive approaches; however, the focus lies more on a positive testing approach. |
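To make the idea of a charter and a time-boxed session more concrete, here is a minimal sketch of how one exploratory session might be recorded. The `Charter` class and its fields are assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

@dataclass
class Charter:
    """Hypothetical record of one time-boxed exploratory session."""
    mission: str          # area of attack and goal for the session
    time_box: timedelta   # fixed session length
    started: datetime
    notes: list = field(default_factory=list)

    def record(self, observation):
        """Keep an observation made during the session."""
        self.notes.append(observation)

    def time_left(self, now):
        """Remaining time in the session, never below zero."""
        return max(self.started + self.time_box - now, timedelta(0))

    def expired(self, now):
        """True once the time box has been used up."""
        return self.time_left(now) == timedelta(0)

if __name__ == "__main__":
    charter = Charter("Explore discount codes at checkout",
                      timedelta(minutes=60), datetime.now())
    charter.record("Expired code is accepted when entered in lowercase")
    print(charter.mission, "| time left:", charter.time_left(datetime.now()))
```

An ad hoc session has no equivalent record, which is the practical difference the table above describes.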

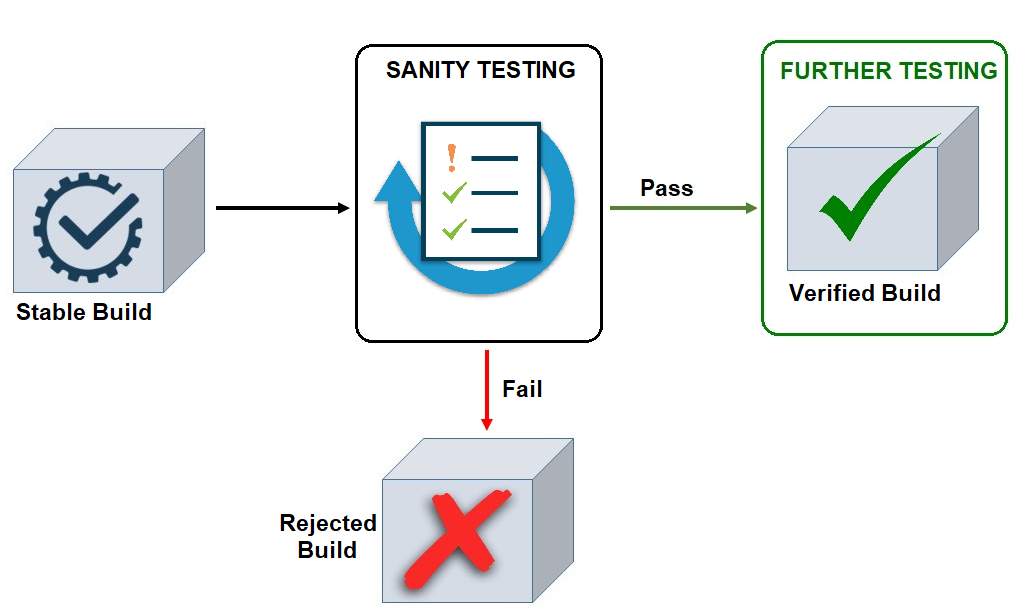

Performing Testing on the Basis of Test Plan

Test cases serve as a guide for the testers. The testing steps, areas and scenarios are defined, and the tester is supposed to follow the outlined approach to perform testing. If the test plan is efficient, it covers most of the major functionality and scenarios and there is a low risk of missing critical bugs.

On the other hand, a test plan can limit the tester’s boundaries. There is less opportunity to find bugs that exist outside of the defined scenarios. Time constraints may also limit the tester’s ability to execute the complete test suite.

So, while Ad Hoc Testing is not sufficient on its own, combining the Ad Hoc approach with a solid test plan and Exploratory testing will strengthen the results. By testing according to the test plan while at the same time devoting resources to Ad Hoc testing, a test team will gain better coverage and lower the risk of missing critical bugs. Also, the defects found through Ad Hoc testing can be included in future test plans so that those defect-prone areas and scenarios can be tested in a later release.
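As a sketch of how defects found through Ad Hoc testing might be folded back into planned testing, the example below keeps the inputs that exposed defects in a list and replays them as regression tests alongside a planned case. The function `apply_discount` and the values in `AD_HOC_FINDINGS` are hypothetical and exist only for illustration.

```python
import unittest

# Hypothetical function under test, shown after the defects were fixed.
def apply_discount(price, percent):
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)

# Inputs that exposed defects during ad hoc sessions (illustrative values).
AD_HOC_FINDINGS = [(100.0, 150), (100.0, -5)]

class DiscountRegressionTests(unittest.TestCase):
    def test_ad_hoc_findings_are_rejected(self):
        # Each past ad hoc finding becomes a permanent regression check.
        for price, percent in AD_HOC_FINDINGS:
            with self.subTest(price=price, percent=percent):
                with self.assertRaises(ValueError):
                    apply_discount(price, percent)

    def test_planned_case(self):
        # A case that came from the original test plan.
        self.assertEqual(apply_discount(200.0, 25), 150.0)
```

Saved as a module, these tests can be run with `python -m unittest`. Each new Ad Hoc finding then only needs one more entry in the list.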

Additionally, in the case where time constraints limit the test team’s ability to execute the complete test suite, the major functionality can still be defined and documented. The tester can then use these guidelines while testing to ensure that these major areas and functionalities have been tested. And after this is done, Ad Hoc testing can continue to be performed on these and other areas.

Conclusion:

The advantage of Ad-hoc testing is that it checks the completeness of testing and can find defects that planned testing misses. The test cases that catch defects are then added to the planned test cases.

Ad-hoc Testing saves a lot of time because it doesn’t require elaborate test planning, documentation, or test case design.

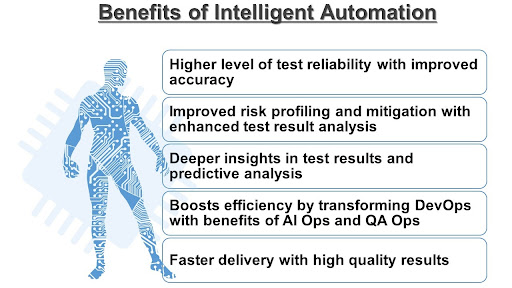

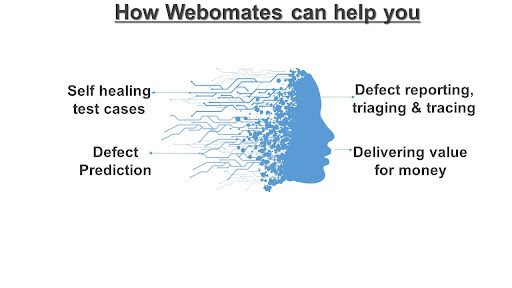

At Webomates, we have applied this technique successfully across multiple domains. We specialize in taking builds from many different software creators, across domains and on 18 different Browser/OS/Mobile platforms, and providing them with defects in 24 hours or less. If you are interested in a demo, see Webomates CQ.